GNS3 has been an invaluable study tool. I am always a fan of buying the real hardware, but for larger topologies with medium to high levels of network redundancy, that can be cost-prohibitive. After I benched VIRL, I got back into GNS3 since I was moving into the ROUTE portion of the CCNP. GNS3 is great, but using it across multiple clients (a combination of Windows and Ubuntu) and maintaining several copies of topologies across those clients was becoming time-consuming. To resolve that, I started saving all of my topologies to my ownCloud (FreeNAS) server. Deploying and saving them from there was easy, and I no longer had to maintain multiple copies of the same topology across several devices. I could build a topology on my Ubuntu desktop, make changes, close it, and see those changes in one of my Windows 10 VMs or on my Ubuntu laptop. That solved one issue. The other issue was that my topologies were growing in size. GNS3 is a lot lighter on system resources than VIRL, but I was pushing the limits.

I decided to repurpose a Supermicro server that I bought several years ago. I had been using it as an ESXi test server alongside my primary ESXi host. I loaded Ubuntu Server 16.04 on it and installed GNS3 server. Installing GNS3 server on Ubuntu Server takes less than 15 minutes and was easily done by following these instructions, which I found with a quick search. The instructions note that GNS3 runs on port 8000, but GNS3 changed the default port to TCP 3080 several months ago.
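If you're not sure which port your own server ended up listening on, a plain TCP connect test will tell you. Here's a minimal Python sketch of that check. The function name `port_is_open` is my own placeholder and has nothing to do with GNS3 itself; it only tests whether something accepts connections on the port, not whether that something is GNS3.

```python
import socket


def port_is_open(host: str, port: int = 3080, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout.

    The default of 3080 matches the GNS3 server port mentioned above;
    pass 8000 to test the older default instead.
    """
    try:
        # create_connection resolves the host and completes the TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name-resolution failures.
        return False
```

Calling `port_is_open("192.0.2.10")` and then `port_is_open("192.0.2.10", 8000)` (with your server's address in place of that documentation address) should show which of the two defaults your install is using.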

In any case, running GNS3 on a dedicated server has proven to have numerous advantages. First and foremost, it takes the load off a standard or even higher-end desktop or laptop. I'm able to run much larger topologies on the server, which has a hyper-threaded quad-core Xeon and 24GB of DDR2. It's an older server, but it gets the job done.

Below is a sample of one of my CCNP topologies. This one simulates an EIGRP network composed of an enterprise core, WAN edge, several branch offices connected over Frame Relay and Ethernet links, an Internet edge running iBGP and eBGP, and simulated ISP routers. GNS3 has come a long way from just supporting older 1700, 2600, 3600, 3700, and 7200 series routers. GNS3 has a marketplace that lets you integrate several types of appliances via Qemu virtual machines, including Cumulus Linux VX switches, various load balancers, VyOS, several Linux virtual machines (which serve as excellent endpoints), ntopng, Open vSwitch, and much more. It's amazing that I don't hear more about these features. One of the best, to me, is that you can use the same images used in VIRL (if you have a valid subscription and access to the images) to run IOS-XRv, ASAv, IOSv, IOSvL2, CSR1000v, and NX-OSv. You basically get VIRL with lower resource consumption.

The edge and ISP routers in this topology are Cisco IOSv VMs, the core is IOSvL2 (basically an L3 switch), and the WAN aggregation routers run traditional 2600, 7200, and 3600 series IOS images. This is a medium-sized topology with several Linux virtual machines running as network hosts/clients to test communication outside of IOS. My physical server has two interfaces, so I use one for management and one that connects to the cloud for routing traffic from my virtual network to my physical network. My GNS3 network devices and clients receive NTP updates and DNS, and even have regular Internet access through this interface. I connect both edge routers to a dumb switch in GNS3 that lets both uplink to my physical Catalyst 3750 core switch. They run eBGP to simulate a real Internet connection, and I redistribute those routes into my physical core's OSPF process to route traffic to my upstream Internet gateway. It really is amazing what you can do with GNS3 now.

Another point that I think doesn't get enough attention is that integrating the VIRL images gives you a good number of switching features, but definitely not 100% of them. For instance, you can set up HSRP, but connected hosts are unable to communicate with the virtual IP. They can communicate within the same VLAN, and traffic can be routed to other interfaces, but only by using the VLAN IP. When it comes to switching, you don't get anything more than you would get in VIRL.

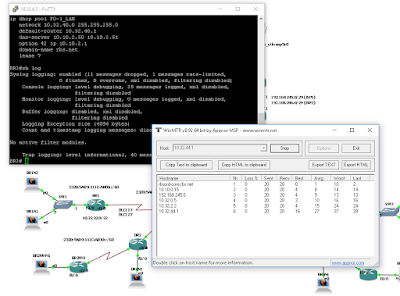

Below are more screenshots of the same EIGRP lab. It's a dual-stack lab (IPv4 and IPv6) but routing protocols are only being applied to IPv4 currently. I was testing IPv6 for more basic features like link-local communication, EUI-64, DHCPv6 (to hosts), NDP, and understanding router advertisements and solicitations.

Screenshot: ICMP communication from a GNS3 VM to the actual Internet.

Screenshot: Routing table of GNS3 WAN router showing EIGRP-advertised default route.

Screenshot: Telnet session from physical PC to GNS3 router and MTR session.

Screenshot: GNS3 ISP transit-router showing BGP routing table.
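Since EUI-64 was one of the IPv6 features I mentioned testing, here's a minimal Python sketch of how a modified EUI-64 address is derived from a MAC address: split the MAC in half, insert `FFFE` in the middle, and flip the universal/local bit (the `0x02` bit of the first byte). The function names, the MAC, and the prefixes are made up for illustration and are not taken from my lab.

```python
import ipaddress


def eui64_interface_id(mac: str) -> bytes:
    """Build the 64-bit modified EUI-64 interface ID from a 48-bit MAC."""
    # Accept 00:0c:29:ab:cd:ef, 00-0c-29-ab-cd-ef, or Cisco-style 000c.29ab.cdef.
    hex_digits = "".join(c for c in mac if c in "0123456789abcdefABCDEF")
    if len(hex_digits) != 12:
        raise ValueError(f"not a 48-bit MAC address: {mac!r}")
    raw = bytearray(bytes.fromhex(hex_digits))
    raw[0] ^= 0x02  # flip the universal/local bit
    # Insert FF:FE between the OUI half and the device half of the MAC.
    return bytes(raw[:3]) + b"\xff\xfe" + bytes(raw[3:])


def eui64_address(prefix: str, mac: str) -> ipaddress.IPv6Address:
    """Combine a /64 prefix with the EUI-64 interface ID derived from mac."""
    net = ipaddress.IPv6Network(prefix)
    if net.prefixlen != 64:
        raise ValueError("EUI-64 addressing expects a /64 prefix")
    iid = int.from_bytes(eui64_interface_id(mac), "big")
    return ipaddress.IPv6Address(int(net.network_address) | iid)
```

With the example MAC `000c.29ab.cdef`, `eui64_address("fe80::/64", "000c.29ab.cdef")` gives `fe80::20c:29ff:feab:cdef`, and the same MAC on the documentation prefix `2001:db8:0:1::/64` gives `2001:db8:0:1:20c:29ff:feab:cdef`. That is handy for checking what a router interface or host should have auto-configured.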

For ROUTE, GNS3 is proving to be one of my most valuable tools, especially with all of the new features added. I can't recommend it enough to anyone currently studying routing concepts. If GNS3 ever gets switching integrated 100%, it will truly be a network student's Swiss Army knife. For the time being, it is more than sufficient for routing labs. I also highly recommend setting up a GNS3 server where possible. I tried deploying a GNS3 VM in several forms on ESXi and it was an insatiable CPU-devouring vampire, no matter how much I tweaked the Idle-PC settings or adjusted resources in ESXi. As a VM, it simply required more CPU than I could give it. I'm sure a more knowledgeable ESXi expert could get it to work much better, but I shamelessly went bare metal. Overall, my GNS3 server is proving to be an excellent investment in my studies.

Have a similar experience? Have recommendations? Feel free to comment.